Instagram recently introduced a pop-up that prompts users to reconsider their comments on posts. To do this, Instagram uses AI to detect potentially offensive or abusive comments.

According to Instagram, the results of the prompt were positive, and the platform is now expanding the same prompt to picture captions.

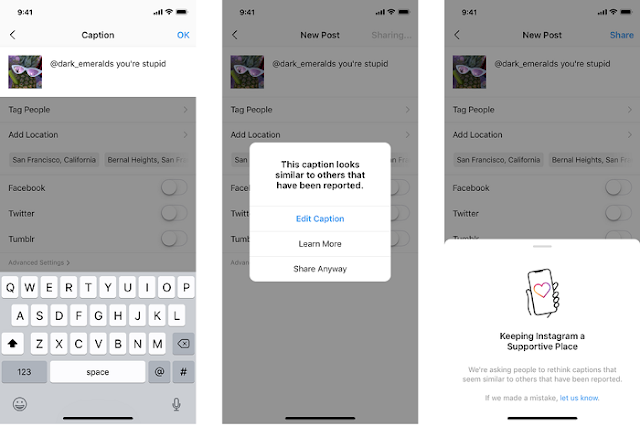

“Today, when someone writes a caption for a feed post, and our AI detects the caption as potentially offensive, they will receive a prompt informing them that their caption is similar to those reported for bullying. They will have the opportunity to edit their caption before it’s posted,” Instagram said.

This means that Instagram will not only alert the user that the caption might be offensive but also notify the user that similar captions have previously been reported by others on the site.

This prompt won’t stop people from posting the intended caption, but it will give users a moment to reconsider by thinking about the effects it might have.

Instagram does not clearly state how its measures to prevent bullying have helped in the past, but in a report it did say:

“Content actioned increased from 2.5 million pieces of content in Q2 2019 to 3.2 million in Q3 2019, as we strengthened our enforcement of our policy on bullying and harassment.”

This is not the only effort Instagram has made to support the mental health of its users. Its attempt to hide total like counts and its introduction of options like ‘restrict’ are other examples of such measures.

What we can learn from this is that Instagram is prioritizing the safety of its users so that they can express themselves safely.

The new prompt will be introduced to users in selected countries for now, but it will gradually become available worldwide in a few months.